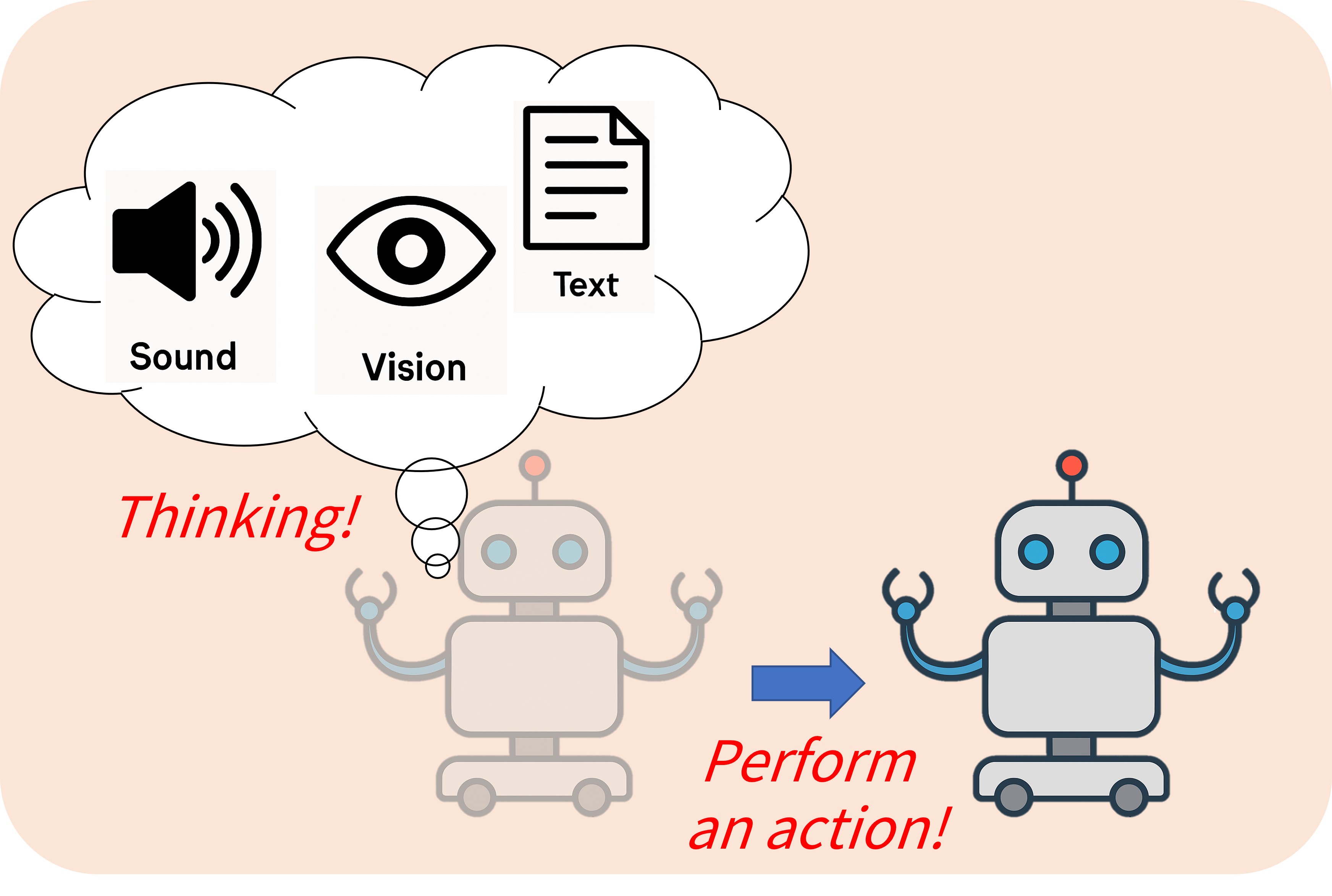

Our research interests include robot audition, sound-aware multimodal LLMs, and sound-based embodied AI. Ultimately, our goal is to enable robots to understand all kinds of real-world sensory information (vision, hearing, touch, etc.) and to act on it appropriately.

- Robot audition encompasses sound source localization, speech separation, automatic speech recognition, and robust operation in complex real-world environments.

- Sound-aware multimodal LLMs are capable of understanding and reasoning about the world by leveraging auditory and other sensory modalities.

- Sound-based embodied AI focuses on enabling agents to act based on their understanding and reasoning about the multimodal sensory world.

Robot Audition

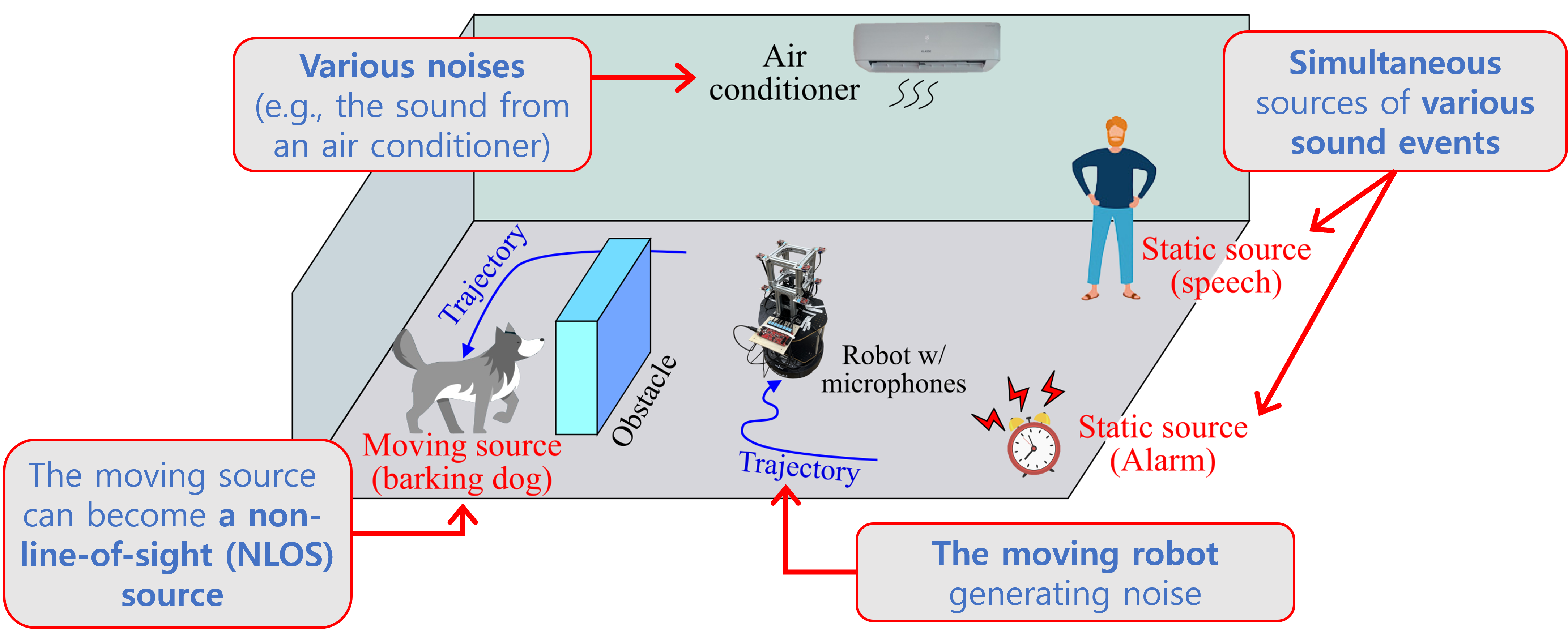

Robot audition refers to the ability of robots to perceive and understand sounds, including key tasks such as sound source localization, speech separation, and automatic speech recognition (ASR). Our research focuses on developing robust robot audition systems that can operate in complex real-world environments, where:

- Multiple types of sounds may occur simultaneously from different sources,

- Both sound sources and the robot itself may be in motion,

- Background noise is present, and

- Sound sources may even be occluded by obstacles, making them invisible to visual sensors.

The goal is to enable robots to accurately interpret auditory information and interact intelligently, even in such dynamic and challenging conditions.
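To make one of these tasks concrete, the following sketch estimates the direction of a single sound source from one pair of microphones using the time difference of arrival (TDOA). It is a textbook baseline for illustration only, not a description of our own methods. It assumes one far-field source, free-field propagation at roughly 343 m/s, and plain (unweighted) cross-correlation. The function names `estimate_delay` and `direction_of_arrival` are hypothetical. None of the challenging conditions listed above (overlapping sources, motion, noise, occlusion) are handled here.

```python
import math

# Assumption: approximate speed of sound in air at about 20 °C, in m/s.
SPEED_OF_SOUND = 343.0


def estimate_delay(ref, sig, max_lag):
    """Return the lag (in samples) that maximizes sum(ref[n] * sig[n + lag]).

    A positive lag means `sig` receives the sound later than `ref`.
    Brute-force time-domain cross-correlation, kept simple for clarity.
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n in range(len(ref)):
            m = n + lag
            if 0 <= m < len(sig):
                score += ref[n] * sig[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(mic1, mic2, fs, mic_distance):
    """Far-field direction of arrival in degrees from broadside.

    fs is the sampling rate in Hz and mic_distance the spacing in metres.
    Positive angles mean the source is on the mic1 side (mic1 hears it first).
    """
    # Physically possible delays are bounded by the microphone spacing.
    max_lag = math.ceil(mic_distance / SPEED_OF_SOUND * fs)
    lag = estimate_delay(mic1, mic2, max_lag)
    tdoa = lag / fs  # time difference of arrival in seconds
    # sin(theta) = c * tdoa / d; clamp to [-1, 1] to guard against rounding.
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tdoa / mic_distance))
    return math.degrees(math.asin(s))
```

In practice, the cross-correlation is usually computed with FFTs and weighted (for example with GCC-PHAT) for robustness to reverberation, and a single microphone pair cannot distinguish front from back; microphone arrays and learning-based methods address these limitations.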

Sound-Aware Robot Intelligence

Robot intelligence has primarily relied on vision-based perception and reasoning. However, many real-world situations cannot be perceived through vision alone. For instance, events such as a doorbell ringing, an alarm going off, or a person falling outside the robot's field of view can be detected only through sound.

In such cases, auditory information is essential for robots to perceive their environment and make appropriate decisions. Our research on Sound-Aware Robot Intelligence, which covers sound-aware multimodal LLMs and sound-based embodied AI, aims to build intelligent systems that treat sound as a core modality and integrate it with vision, touch, and other sensory inputs to enable multimodal perception, reasoning, and action in real-world scenarios.